One-class Logic Learning Machine¶

One-class classification problems can be used to define rules regarding a single class, where all, or almost all, of the data in the dataset belongs to the same class.

The generated rules either describe the class to which the dataset belongs (one-class) or the class to which the data does not belong (anomaly). These problems are performed in Rulex Factory with the One-Class Logic Learning Machine (LLM) task.

The task is divided into three tabs:

the Options tab, where you can set Basic and Advanced options for the analysis.

the Monitor tab, where you can visualize the results of the analysis.

the Results tab, where you can check the computation details.

The Options tab¶

The Options tab contains all the task’s features which can be customized to obtain the desired output.

It is divided into two smaller tabs, the Basic and the Advanced tab.

The Available attributes list is always available, no matter which tab is opened.

Basic tab

The Basic tab is divided in three sections: the Generate rules for section, the attribute drop area, and the basic options area.

The Generate rules for drop down list allows to set the problem type to be solved. Possible values are:

One-class (0): generates rules that fully characterize the dataset, with high covering and no errors.

Anomaly (1): generates rules that do not characterize the dataset, with low covering (zero covering is possible if the Also generate rules with zero covering option has been selected) and a high error percentage.

In the attribute drop area, the following panels are provided:

Input attributes: drag the attributes that will act as input attributes in generating classification rules when the task is computed. Instead of manually dragging and dropping attributes, they can be defined via a filtered list.

Key attributes: drag the key attributes. The task will create a different set of rules for each key attribute value. Instead of manually dragging and dropping attributes, they can be defined via a filtered list.

The following basic options are provided in the panel at the bottom of the screen:

Number of rules for each class (0 means ‘automatic’): if set to 0, the minimum number of rules required to cover all patterns in the training set is generated. Otherwise, set the desired number of rules for each class.

Minimum rule distance for additional rules: the minimum difference between additional rules, taken into consideration if the Prevent rules in input from being included in the LLM model option has been selected.

Maximum number of conditions for a rule: the maximum number of conditions in a rule.

Maximum nominal values: the maximum number of nominal values that can be contained in a condition. This is useful for simplifying conditions and making them more manageable, for example when an attribute has a very high number of possible nominal values. It is worth noting that overly complicated conditions also run the risk of over-fitting, where rules are too specific for the test data, and not generic enough to be accurate on new data.

Expected percentage of anomalies in the training set (%): the maximum percentage of anomalies the dataset should contain.

For one-class problems this percentage will correspond to the maximum percentage of errors, for anomalies to the maximum percentage of covering.Minimum interval for a condition on ordered attribute (%): the minimum interval for a condition on ordered attributes as a percentage of the total range.

- Consider relative error instead of absolute: specify whether the relative or absolute error must be considered.

The Expected percentage of anomalies in the training set % is set by considering proportions of samples belonging to different classes. Imagine a scenario where for given rule pertaining to the specific output value yo: TP is the number of true positives (samples with the output value yo that verify the conditions of the rule).

TN is the number of true negatives (samples with output values different from yo that do not verify the conditions of the rule).

FP is the number of false positives (samples with output values different from yo that do verify the conditions of the rule).

FN is the number of false negatives (samples with the output values yo that do not verify the conditions of the rule).

- Consider relative error instead of absolute: specify whether the relative or absolute error must be considered.

Prevent interval conditions for ordered attributes: if selected, interval conditions such as 1<x≤5 are avoided, and only conditions with > (greater than) ≤ (lower or equal than) are generated.

Advanced tab

In the Advanced tab, all the advanced task’s configuration options are provided:

Also generate rules with zero covering: if selected, it is possible to generate rules that do not cover any pattern of the training set. This option may be useful when generating rules for anomalies.

Prevent rules in input from being included in the LLM model: if selected, rules fed into the LLM task should not be included in the final ruleset.

Allow to use complements in conditions on nominal: if selected, conditions on nominal attributes can be expressed as complements.

Ignore outliers while building rules: if selected, the set of remaining patterns, not covered by generated rules, are ignored if its size is less than the threshold defined in the Expected percentage of anomalies in the training set (%) option.

Differentiate multiple rules by attributes: if selected, when multiple rules have to be generated, the presence of the same attributes in their conditions is penalized.

Change roles for input and output attributes: if selected, input and output roles defined in previous Data Manager tasks will be overwritten by those defined in this task.

Minimize number of conditions: if selected, rules with fewer conditions, but the same covering, are privileged.

Ignore attributes not present in rules: if selected, attributes that have not been included in rules will be flagged Ignore at the end of the training process, to reflect their redundancy in the classification problem at hand.

Hold all the generated rules: if selected, even redundant generated rules, which are verified only by training samples that already covered by other more powerful rules, are kept.

Aggregate data before processing: if selected, identical patterns are aggregated and considered as a single pattern during the training phase.

Missing values verify any rule condition: if selected, missing values will be assumed to satisfy any condition. If there is a high number of missing values, this choice can have an important impact on the outcome.

Perform a coarse-grained training (faster): if selected, the LLM training algorithm considers the conditions with the subset of values that maximizes covering for each input attribute. Otherwise, only one value at a time is added to each condition, thus performing a more extensive search. The coarse-grained training option has the advantage of being faster than performing an extensive search.

Percentage of negative patterns (%): the percentage of patterns which do not belong to the dataset class.

Append results: if selected, the results of this computation are appended to the dataset, otherwise they replace the results of previous computations.

Maximum number of trials in bottom-up mode: the number of times a bottom-up procedure can be repeated, after which a top-down procedure will be adopted.

The bottom-up procedure starts by analyzing all possible cases, defining conditions and reducing the extension of the rules. If, at the end of this procedure, the error is higher than the value entered for the Expected percentage of anomalies in the training set (%) option, the procedure starts again, inserting an increased penalty on the error. If the maximum number of trials is reached without obtaining a satisfactory rule, the procedure is switched to a top-down approach.Initialize random generator with seed: if selected, a seed, which defines the starting point in the sequence, is used during random generation operations. Consequently, using the same seed each time will make each execution reproducible. Otherwise, each execution of the same task (with same options) may produce dissimilar results due to different random numbers being generated in some phases of the process.

Overlap between rules (%): set the maximum percentage of patterns, which can be shared by two rules.

- Key usage mode: the possible values when one or more attributes are selected as keys:

Complete: considers all the possible combinations of key values evaluating resulting conditions on the whole training set;

Partial: makes this evaluation only on the portion of dataset having those key values.

Minimal: ensures that all the generated rules include at least one condition on key attributes.

The Monitor tab¶

The Monitor tab contains statistics on the generated rules as a set of histograms. These plots can be viewed during and after the computation.

This tab is divided itself into three tabs:

the Conditions tab

the Covering tab

the Error tab

The Conditions tab

The Conditions tab contains a bar plot displaying the following information:

On the X axis, you will find the number of conditions generated.

On the Y axis, you will find the counts of the rules containing the specified number of conditions.

the Color target, which can be found at the right of the plot, containing the output’s values. If you click on the output’s value, the plot displays the required information accordingly.

If you hover over the bars, you will find basic information on them, which generally consists of the Counts, the Range, the Color value, the Percentage on total, the Percentage on target, and the Percentage on bar.

The Covering tab

The Covering tab contains a bar plot displaying the following information:

On the X axis, you will find the covering percentage.

On the Y axis, you will find the counts of the rules with the corresponding percentage of covering.

the Color target, which can be found at the right of the plot, containing the output’s values. If you click on the output’s value, the plot displays the required information accordingly.

If you hover over the bars, you will find basic information on them, which generally consists of the Counts, the Range, the Color value, the Percentage on total, the Percentage on target, and the Percentage on bar.

The Error tab

The Error tab contains a bar plot displaying the following information:

On the X axis, you will find the error percentage.

On the Y axis, you will find the counts of the rules with the corresponding percentage of error.

the Color target, which can be found at the right of the plot, containing the output’s values. If you click on the output’s value, the plot displays the required information accordingly.

If you hover over the bars, you will find basic information on them, which generally consists of the Counts, the Range, the Color value, the Percentage on total, the Percentage on target, and the Percentage on bar.

The Results tab¶

The Results tab provides information on the computation. It is divided into two sections:

- In the General info section you will find:

The Task Label, which is the task’s name on the interface.

The Elapsed time, which indicates how long it took to complete the computation

- In the Result Quantities panel, you will find:

Average covering for a rule (%)

Average error for a rule

Average number of conditions in a rule

Maximum error for a rule

Maximum number of conditions in a rule

Minimum covering for a rule (%)

Minimum error for a rule

Minimum number of conditions in a rule

Number of attributes in rules

Number of distinct samples

Number of rules

Number of samples

You can check or uncheck the quantities above to visualize them in the results list. You can visualize their specific values by clicking on the arrow next to them.

Example¶

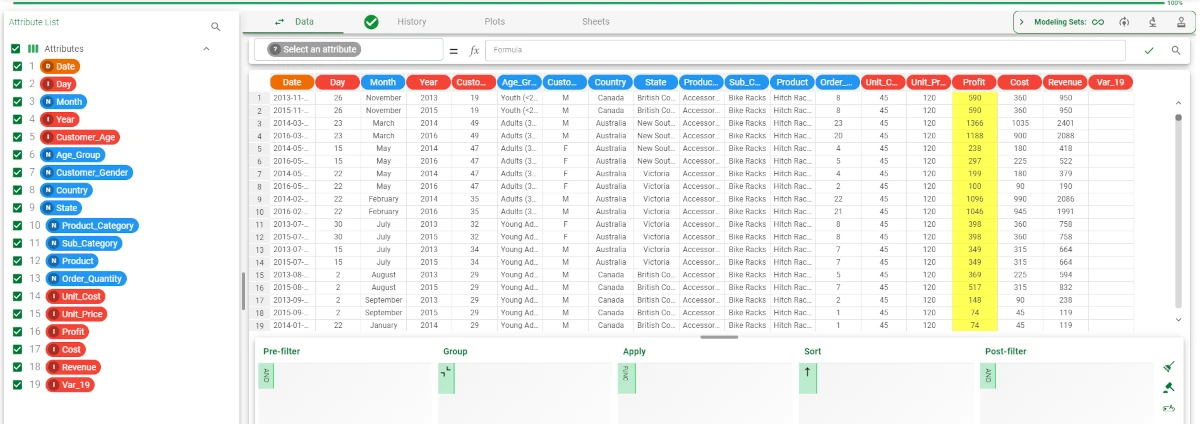

After having imported the dataset, add a Data Manager task and define the Profit attribute as Output.

Split the data into training (70%) and test (30%) set with the Split Data task, add a One-Class LLM task and link it to the Split Data task.

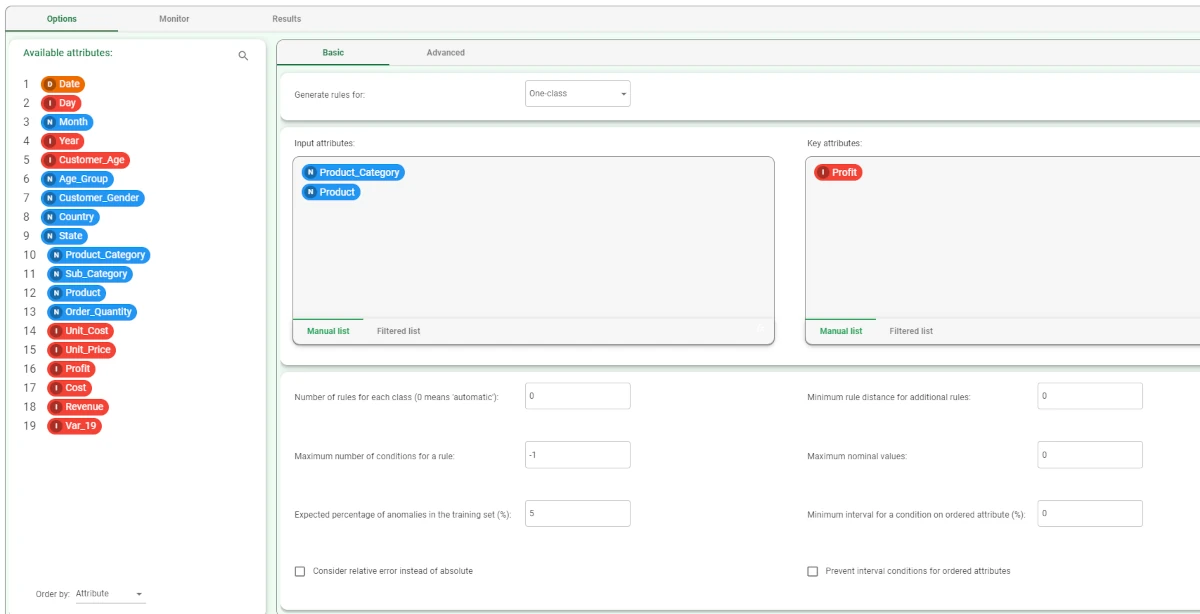

- Configure the One-Class LLM as follows:

Drag the Product_Category and the Product attribute onto the Input attributes area.

Drag the Profit attribute onto the Key attributes area.

Leave the other options as default and save and compute the task.