Neural Networks Regression

The Neural Network task creates a feed-forward neural network (NN). The NN model consists of a number of elementary perceptrons organised in layers. Each perceptron applies a sigmoidal activation function to a weighted sum of its inputs. A back-propagation algorithm is used to find the optimal weight vector for each perceptron. The output of the task is a model consisting of a weight matrix, which can be used by an Apply Model task to perform the NN prediction on a set of examples.
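As a rough illustration of the description above, the following sketch propagates an input through layers of sigmoidal perceptrons. It is not the task's actual implementation: the function names, the logistic form of the sigmoid, and the example weights are all assumptions chosen for illustration.

```python
import math

def sigmoid(x):
    """Logistic activation, with output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Each row of `weights` holds one perceptron's weights, one per input."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def network_forward(inputs, layers):
    """Propagate `inputs` through a list of (weights, biases) pairs."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 2 hidden perceptrons -> 1 output.
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                    # output layer
]
output = network_forward([1.0, 1.0], layers)
```

Back-propagation would adjust the numbers in `layers` so that `output` moves closer to the target value on the training examples.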

The NN task is divided into four tabs:

The Options Tab, where users can choose the input and output attributes and set the task options.

The Monitor Tab, where users can view the evolution over time of quantities related to the network optimization.

The Weights Tab, where users can view the weight matrix for each hidden layer.

The Results Tab, where users can visualize the results.

The Options tab

The Options tab is divided into two tabs: the Basic tab and the Advanced tab.

In the Basic tab, users will find the following structure:

the Available attributes, where users will find the dataset’s attributes.

the Attributes drop area, where users can drag the chosen attributes.

the Customization pane, where users can set the task options.

Available Attributes

Within this section, users will find a list of all the dataset’s attributes. To search for a specific attribute, use the lens icon at the top right of the pane. Users can also sort the attributes by choosing one of the following criteria from the Order by drop-down list:

Attribute

Name

Type

Ignored

Role

Attribute drop area

The Attribute drop area is divided into two panes:

The Input attributes pane, where users can drag and drop the input attributes they want to use to build the network. This operation can be done via a Manual List (users need to manually drag & drop the selected attributes onto the pane) or via a filtered list.

The Output attributes pane, where users can drag and drop the output attributes the network should predict. This operation can be done via a Manual List (users need to manually drag & drop the selected attributes onto the pane) or via a filtered list.

Customization Pane

Within this pane, users can set the following options:

Number of hidden layers: the number of hidden layers in the network.

Neurons for each hidden layer: the number of neurons in each hidden layer. This option is enabled only if the value specified for the Number of hidden layers option is greater than zero.

Learning rate: the coefficient of the gradient descent.

Momentum term: the momentum of the back-propagation algorithm.

Normalization for input attributes: the type of normalization to use when treating ordered (discrete or continuous) variables. Available values are:

None

Attribute

Normal

Minmax [0,1]

Minmax [-1,1]

Normalization for output attributes: the method for normalizing output variables. Available values are:

None

Attribute

Normal

Minmax [0,1]

Minmax [-1,1]
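The min-max and Normal options can be sketched as follows. This is only an illustration under stated assumptions: "Normal" is taken here to mean standardization to zero mean and unit (population) standard deviation, the helper names are hypothetical, and the Attribute option is not covered.

```python
import statistics

def minmax(values, low=0.0, high=1.0):
    """Rescale linearly so the smallest value maps to `low` and the largest to `high`."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        return [low for _ in values]  # constant column: nothing to rescale
    return [(v - v_min) / (v_max - v_min) * (high - low) + low for v in values]

def standardize(values):
    """Shift to zero mean and scale to unit standard deviation (assumed meaning of Normal)."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

hours = [20.0, 40.0, 60.0]
unit_range = minmax(hours)                    # [0.0, 0.5, 1.0]
symmetric_range = minmax(hours, -1.0, 1.0)    # [-1.0, 0.0, 1.0]
z_scores = standardize(hours)
```

Rescaling the output attribute to the range of the activation function matters because a sigmoidal unit cannot produce values outside that range.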

In the Advanced tab, users can set the following options:

Maximum number of iterations. It indicates the overall maximum number of iterations for the gradient descent algorithm.

Number of trials. It indicates the number of trials, i.e. the repeated executions of the back-propagation algorithm with different starting conditions.

Maximum number of iterations for any trial. It indicates the maximum number of iterations allowed for the gradient descent algorithm for each trial.

Initialize random generator with seed. If selected, a seed, which defines the starting point in the sequence, is used during random generation operations.

Use symmetric activation function. If selected, an activation function with range (-1,1) is used instead of one with range (0,1).

Append results. If selected, the results of this computation are appended to the dataset, otherwise they replace the results of previous computations.

Aggregate data before processing. If selected, identical patterns will be aggregated and considered as a single pattern during the evaluation process.

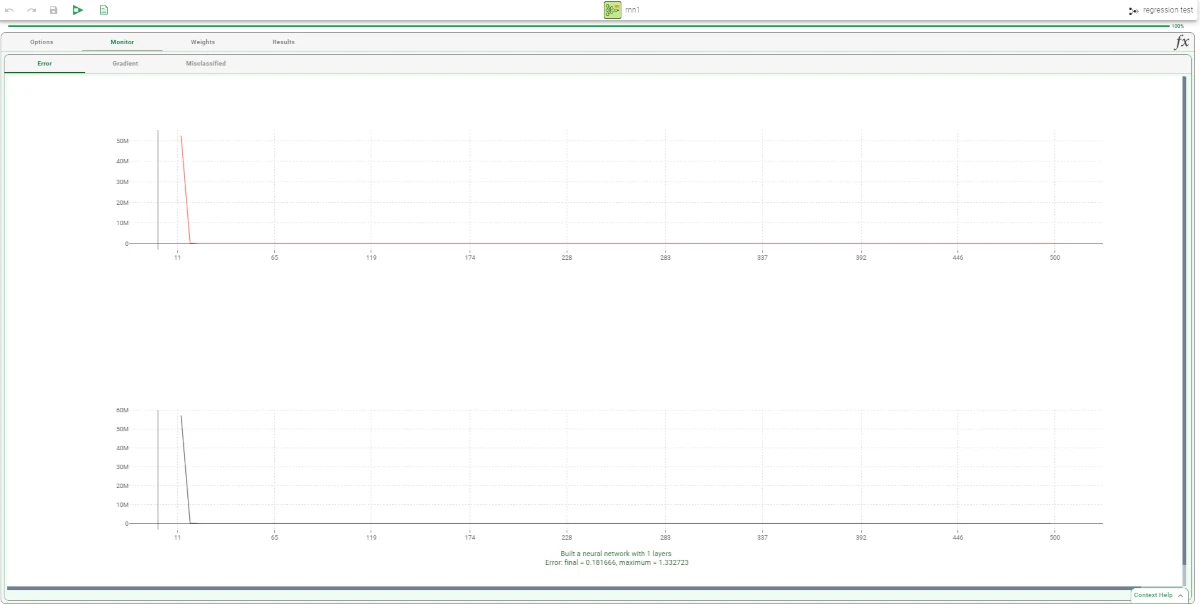
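How the trial-related options interact can be sketched on a toy problem. The loss function, the starting range, and all names below are hypothetical stand-ins rather than the task's real internals. The sketch only shows repeated descents from different random starting points, a per-trial iteration cap, an overall iteration cap, and a seed that makes the starting points reproducible.

```python
import random

def loss(w):
    """Toy non-convex loss with minima at w = -1 and w = 2 (stand-in for the network error)."""
    return (w + 1.0) ** 2 * (w - 2.0) ** 2

def grad(w):
    """Derivative of `loss` with respect to w."""
    return 2.0 * (w + 1.0) * (w - 2.0) * (2.0 * w - 1.0)

def run_trials(n_trials, max_total_iter, max_iter_per_trial, seed=None, learning_rate=0.01):
    rng = random.Random(seed)  # a fixed seed gives reproducible starting points
    best_w, best_loss, total_iter = None, float("inf"), 0
    for _ in range(n_trials):
        if total_iter >= max_total_iter:
            break  # overall iteration budget exhausted
        w = rng.uniform(-3.0, 3.0)  # different starting condition for each trial
        for _ in range(max_iter_per_trial):
            if total_iter >= max_total_iter:
                break
            w -= learning_rate * grad(w)  # plain gradient descent step
            total_iter += 1
        if loss(w) < best_loss:
            best_w, best_loss = w, loss(w)  # keep the best trial so far
    return best_w, best_loss, total_iter

best_w, best_loss, total_iter = run_trials(3, 500, 200, seed=0)
```

With three trials, a per-trial cap of 200 and an overall cap of 500, the third trial is cut short after 100 iterations.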

The Monitor tab

Within this tab, users can view the temporal evolution of some quantities related to the network optimization.

This tab is itself divided into three tabs:

The Error Tab

The Gradient Tab

The Misclassified Tab

Error tab

Within this tab, users will see two plots. The upper plot shows the mean error on the training set. As training progresses, the neural network gradually changes the weights (i.e. the parameters of the NN) so that this value decreases. The lower plot shows the maximum error, i.e. the error of the record with the highest error at each iteration.

On the X axis: users will see the number of iterations.

On the Y axis: users will see the value of error.
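The two plotted quantities can be sketched as follows for a single iteration. The source does not state which error measure is used, so the squared error below is an assumption, as are the function name and the sample values.

```python
def error_summary(actual, predicted):
    """Return (mean error, maximum error) over all records, using squared error."""
    errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return sum(errors) / len(errors), max(errors)

# Hypothetical targets and network outputs for three training records.
mean_error, max_error = error_summary([40.0, 35.0, 50.0], [38.0, 36.0, 44.0])
# Squared errors are 4, 1 and 36, so the mean is 41 / 3 and the maximum is 36.
```

Computing this pair once per iteration would give the points of the two curves.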

Gradient tab

The gradient indicates how close the NN optimization is to stabilizing. The lower its value, the less the weights change from one iteration to the next.

On the X axis: users will see the number of iterations.

On the Y axis: users will see the value of gradient.
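A minimal sketch of why the gradient shrinks as the weights settle, assuming a standard gradient descent update with a momentum term. The toy loss and all names are hypothetical, and the actual update rule used by the task may differ.

```python
import math

def gradient(weights):
    """Gradient of the toy loss sum(w_i ** 2), a stand-in for the network error."""
    return [2.0 * w for w in weights]

def descend(weights, learning_rate=0.1, momentum=0.5, iterations=50):
    """Run gradient descent with momentum and record the gradient norm at each iteration."""
    velocity = [0.0] * len(weights)
    norms = []
    for _ in range(iterations):
        g = gradient(weights)
        norms.append(math.sqrt(sum(x * x for x in g)))
        # The new step blends the previous step (momentum) with the current gradient.
        velocity = [momentum * v - learning_rate * x for v, x in zip(velocity, g)]
        weights = [w + v for w, v in zip(weights, velocity)]
    return weights, norms

final_weights, norms = descend([1.0, -2.0])
```

Plotting `norms` against the iteration index would give a curve of the kind shown in this tab. With momentum, the curve can oscillate slightly while still trending towards zero.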

Misclassified tab

Within this tab, users can view the number of misclassified cases within the training set. The curve in the upper plot generally starts at a certain level and then decreases to 0, while in more complex cases it may fluctuate. The curve in the lower plot displays the minimum number of misclassifications.

On the X axis: users will see the number of iterations.

On the Y axis: users will see the value of misclassifications.

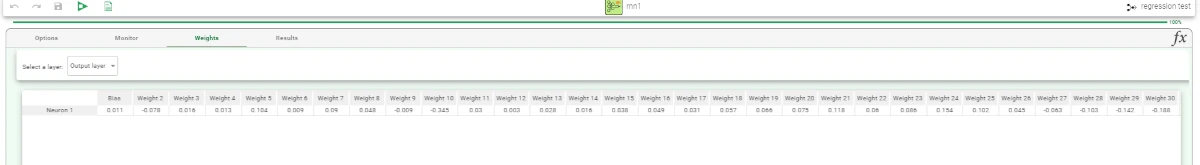

The Weights tab

In this tab, users can view the weight matrix for each hidden layer. The layer to be displayed can be selected from the Select a Layer drop-down list.

The Results tab

Within this tab, users can visualize a summary of the computation.

This tab is divided into two panes:

General Info

Within this pane, users can find the following information:

Task label

Elapsed time (sec)

Result Quantities

Within this pane, users can select which of the following quantities to display:

Average number of iterations per trial

Final value of gradient

Number of layers

Number of neuronal units

Number of samples

Number of trials

Number of weights (included biases)

Total number of iterations

These checkboxes are checked by default.

To the right of each checkbox, a drop-down list lets users choose which information to visualize:

Average number of iterations per trial: Iter.

Final value of gradient: Grad.

Number of layers: Layer, Unit, Weight, Trial, Iter.

Number of neuronal units: Layer, Unit, Weight, Trial, Iter.

Number of samples: Train, Test, Valid, Whole.

Number of trials: Layer, Unit, Weight, Trial, Iter.

Number of weights (included biases): Layer, Unit, Weight, Trial, Iter.

Total number of iterations: Layer, Unit, Weight, Trial, Iter.
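As a worked illustration of the Number of weights (included biases) quantity, the sketch below counts the parameters of a fully connected network. It assumes one bias per unit and one input unit per attribute, whereas nominal attributes may in practice be encoded with several units, so the figure reported by the task can differ. The function name is hypothetical.

```python
def count_weights(layer_sizes):
    """Count weights plus one bias per unit across consecutive fully connected layers."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# 5 input units, one hidden layer of 5 neurons, 1 output unit:
# (5 + 1) * 5 + (5 + 1) * 1 = 36
n_weights = count_weights([5, 5, 1])
```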

Example

After having imported the dataset with the Import from Text File task, split the dataset into test and training sets (30% test and 70% training) with the Split Data task.
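Outside the platform, a 70/30 split of this kind could be sketched as below. This is not how the Split Data task works internally. The shuffling, the seed, and the function name are assumptions made for illustration.

```python
import random

def split_rows(rows, test_fraction=0.3, seed=0):
    """Shuffle a copy of `rows` and return (training rows, test rows)."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

train, test = split_rows(list(range(10)))  # 7 training rows, 3 test rows
```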

Then, add a Neural Networks Regression task to the flow.

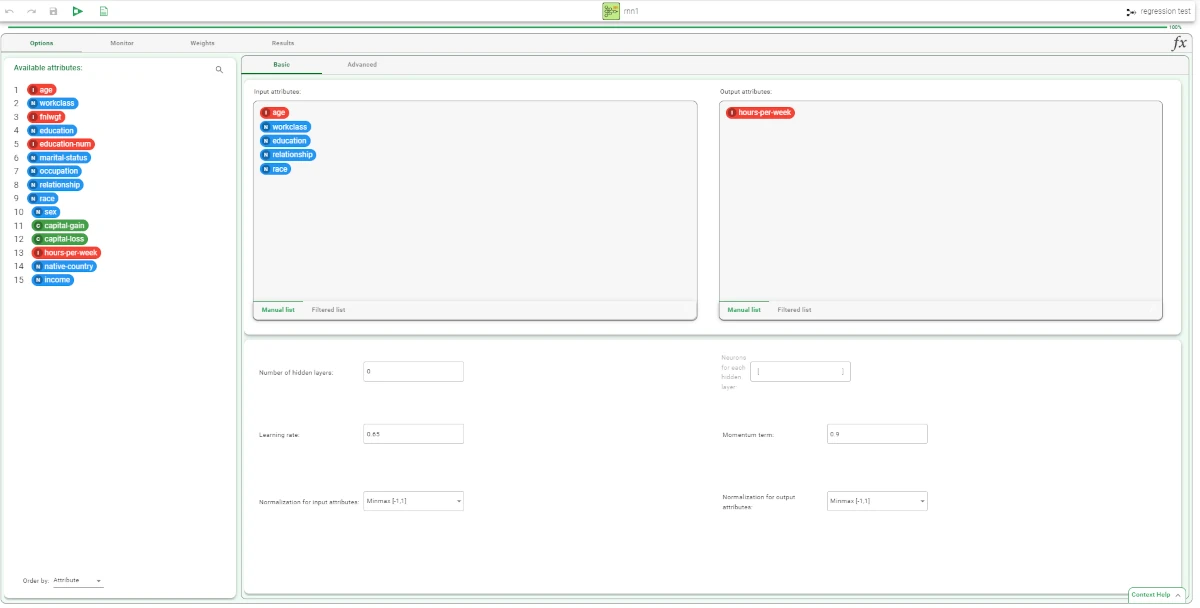

Configure the following options:

Number of hidden layers: 1

Neurons for each hidden layer: 5

Maximum number of iterations: 500

Maximum number of iterations for any trial: 500

Configure the following attributes:

Input attributes:

age

workclass

education

relationship

race

Output attributes: hours-per-week.

Save and compute the task.

The progress of the computation can be checked in the Monitor tab of the NN task. Within this tab, users can visualize the values of Error, Gradient, and Misclassified by clicking on the corresponding tab.

The Weights tab displays the weights obtained through the optimization procedure. The layer to be visualized can be selected in the Select a layer drop-down list. Each row corresponds to a neuron of that layer and contains the weights relative to each input attribute.

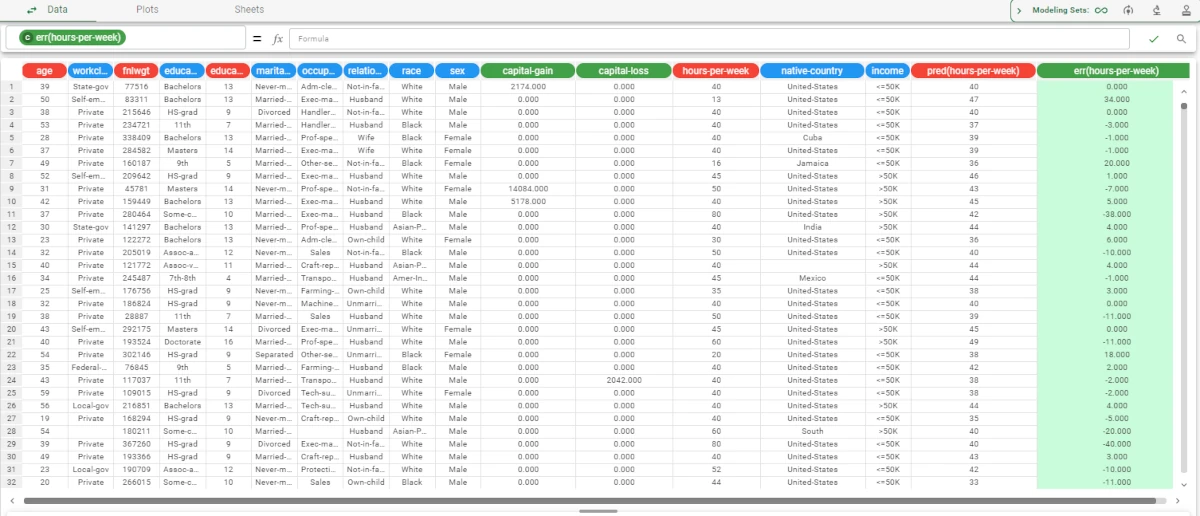

Add an Apply Model task to the NN task to apply the model to data. The forecast produced can be analyzed by right-clicking the Apply Model task and selecting Take a look.

In the data table, the following columns showing the results of the NN computation have been added:

the NN output forecast: pred(hours-per-week)

the error, i.e. the difference between the real output and the predicted output; if the actual output is missing, the field is left empty: err(hours-per-week)
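The two added columns can be sketched as follows, assuming the error is computed as the actual value minus the predicted value and that a missing actual value is represented by `None`. The function name and the sample rows are hypothetical.

```python
def add_prediction_columns(rows, predictions, target="hours-per-week"):
    """Return copies of `rows` with pred(...) and err(...) columns appended."""
    result = []
    for row, pred in zip(rows, predictions):
        actual = row.get(target)
        # The error is left empty when the actual output is missing.
        err = None if actual is None else actual - pred
        result.append({**row, f"pred({target})": pred, f"err({target})": err})
    return result

rows = [{"age": 39, "hours-per-week": 40.0}, {"age": 50, "hours-per-week": None}]
table = add_prediction_columns(rows, [38.5, 42.0])
```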